Section: New Results

Semi- and non-parametric methods

Harmony Search with Differential Mutation Based Pitch Adjustment

Participants : Kai Qin, Florence Forbes.

Harmony search (HS), an emerging metaheuristic that mimics the improvisation behavior of musicians, has proved effective in solving various numerical and real-world optimization problems. This work [36] presents a harmony search with differential mutation based pitch adjustment (HSDM) algorithm, which improves the original pitch adjustment operator of HS using the self-referential differential mutation scheme that characterizes differential evolution, another celebrated metaheuristic. In HSDM, the differential mutation based pitch adjustment can dynamically adapt to the properties of the landscapes being explored at different search stages. Meanwhile, the execution probability of the pitch adjustment operator is allowed to vary randomly between 0 and 1, which maintains both coarse and fine exploitation throughout the search. HSDM has been evaluated and compared with the original HS and two recent HS variants on 16 numerical test problems of varying landscape complexity at 10 and 30 dimensions. HSDM outperforms the compared algorithms on most of the test problems.
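The improvisation step of HSDM can be sketched as follows. This is a minimal illustration under our own assumptions, not the published implementation: the harmony memory size `hms`, the memory consideration rate `hmcr`, the uniform draw of the scale factor and the sphere test function are illustrative choices. What the sketch takes from the text is that the pitch adjustment adds a scaled difference of two stored harmonies instead of a fixed-bandwidth step, and that the pitch adjustment probability is redrawn uniformly at each improvisation.

```python
import random


def sphere(x):
    """Test function: sum of squares, minimum 0 at the origin."""
    return sum(v * v for v in x)


def hsdm(f, dim, lower, upper, hms=10, hmcr=0.9, iters=5000, seed=0):
    """Minimal harmony search with differential-mutation pitch adjustment."""
    rng = random.Random(seed)
    # Harmony memory: hms random candidate solutions and their costs.
    memory = [[rng.uniform(lower, upper) for _ in range(dim)] for _ in range(hms)]
    cost = [f(h) for h in memory]
    for _ in range(iters):
        par = rng.random()  # pitch adjustment probability, redrawn in [0, 1)
        new = []
        for j in range(dim):
            if rng.random() < hmcr:
                # Memory consideration: reuse component j of a stored harmony.
                r1, r2, r3 = rng.sample(range(hms), 3)
                value = memory[r1][j]
                if rng.random() < par:
                    # Differential mutation instead of a fixed-bandwidth step:
                    # the step size follows the current spread of the memory.
                    scale = rng.random()  # assumption: scale factor in [0, 1)
                    value += scale * (memory[r2][j] - memory[r3][j])
            else:
                value = rng.uniform(lower, upper)  # random selection
            new.append(min(max(value, lower), upper))  # stay inside the box
        new_cost = f(new)
        worst = max(range(hms), key=lambda i: cost[i])
        if new_cost < cost[worst]:  # replace the worst harmony if improved
            memory[worst], cost[worst] = new, new_cost
    best = min(range(hms), key=lambda i: cost[i])
    return memory[best], cost[best]


best, best_cost = hsdm(sphere, dim=5, lower=-5.0, upper=5.0)
print(best_cost)
```

Because the differential step shrinks as the memory converges, the adjustment is coarse early in the search and fine later on, which matches the adaptive behavior described above.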

Dynamic Regional Harmony Search Algorithm with Opposition and Local Learning

Participants : Kai Qin, Florence Forbes.

To address deficiencies of the original harmony search (HS) such as premature convergence and stagnation, a dynamic regional harmony search (DRHS) algorithm incorporating opposition and local learning is proposed [35]. DRHS uses opposition-based initialization and runs HS independently within multiple groups, which are randomly regrouped at a fixed period. Besides the traditional harmony improvisation operators, an opposition-based harmony creation scheme is introduced to update the group memory. Any prematurely converged group is restarted with doubled size to further strengthen its exploration capability. Local search is periodically applied to exploit promising regions around top-ranked candidate solutions. The performance of DRHS has been evaluated and compared with HS on 12 numerical test problems taken from the CEC2005 benchmark, at 10D and 30D. DRHS consistently outperforms HS on all the test problems at both 10D and 30D.
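Two ingredients of DRHS, opposition-based initialization and the periodic random regrouping of the population, can be sketched as below. The function names, the number of groups and the shifted sphere test function are hypothetical. The opposite of a point x in the box [lower, upper]^d is taken as lower + upper - x, the usual definition in opposition-based learning. The per-group HS runs, the opposition-based harmony creation, the restarts and the local search are omitted.

```python
import random


def shifted_sphere(x):
    """Test function with its minimum at (1, ..., 1), so x and its opposite differ."""
    return sum((v - 1.0) ** 2 for v in x)


def opposite(x, lower, upper):
    """Opposite point: each coordinate is reflected through the centre of the box."""
    return [lower + upper - v for v in x]


def opposition_init(f, n, dim, lower, upper, rng):
    """Keep the n best among n random points and their n opposite points."""
    pop = [[rng.uniform(lower, upper) for _ in range(dim)] for _ in range(n)]
    candidates = pop + [opposite(x, lower, upper) for x in pop]
    candidates.sort(key=f)
    return candidates[:n]


def regroup(pop, n_groups, rng):
    """Randomly reshuffle the population into n_groups groups of similar size."""
    order = list(range(len(pop)))
    rng.shuffle(order)
    return [[pop[i] for i in order[g::n_groups]] for g in range(n_groups)]


rng = random.Random(1)
pop = opposition_init(shifted_sphere, n=12, dim=3, lower=-5.0, upper=5.0, rng=rng)
groups = regroup(pop, n_groups=3, rng=rng)
print([len(g) for g in groups])  # [4, 4, 4]
```

In DRHS, the regrouping would be called every fixed number of iterations so that information flows between groups.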

Evolutionary algorithms with CUDA

Participants : Kai Qin, Federico Raimondo.

Evolutionary algorithms (EAs), inspired by natural evolution processes, have proved effective in solving various real-world optimization problems, although their practical use may be limited by their computational cost. In fact, EAs are inherently parallelizable, because many of their operations apply independently to each individual, or to each element of an individual, across the evolving population. However, most existing EAs are designed and implemented sequentially, mainly because hardware platforms supporting parallel computing and software platforms facilitating parallel programming have not been widely available.

In recent years, the graphics processing unit (GPU) has emerged as a powerful general-purpose computing device that efficiently supports massively data-parallel computations carried out on its hundreds of cores. The Compute Unified Device Architecture (CUDA) technology developed by NVIDIA provides an intuitive way to express parallelism and to implement parallel programs in popular programming languages such as C, C++ and FORTRAN. Accordingly, one can simply write a program for a single data element, which is automatically distributed across hundreds of cores and executed by thousands of threads. Although the CUDA programming model is easy to use, the efficiency of CUDA parallel programs crucially depends on careful consideration of the hardware characteristics of GPUs during algorithmic design and implementation, especially memory usage and thread management (to maximize the occupancy of the streaming multiprocessors). Without such considerations, parallel programs may even run slower than their sequential counterparts.
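The "one program per data element" model can be illustrated with a serial CPU emulation of a one-dimensional CUDA grid. In CUDA C, the element index of a thread is usually computed as `blockIdx.x * blockDim.x + threadIdx.x`, and a bounds check discards the surplus threads of the last block; the sketch mirrors that arithmetic in Python. The `launch` helper and the SAXPY kernel are hypothetical illustrations, and real GPU threads run concurrently rather than in a loop.

```python
import math


def launch(kernel, n, block_dim, *args):
    """Serially emulate a 1-D CUDA grid: one kernel call per (block, thread)."""
    grid_dim = math.ceil(n / block_dim)  # enough blocks to cover all n elements
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, n, *args)
    return grid_dim


def saxpy_kernel(block_idx, block_dim, thread_idx, n, a, x, y, out):
    """Kernel body written for ONE element: out[i] = a * x[i] + y[i]."""
    i = block_idx * block_dim + thread_idx  # blockIdx.x * blockDim.x + threadIdx.x
    if i < n:  # threads of the last block may fall past the end of the data
        out[i] = a * x[i] + y[i]


x = [float(i) for i in range(10)]
y = [1.0] * 10
out = [0.0] * 10
blocks = launch(saxpy_kernel, len(x), 4, 2.0, x, y, out)
print(blocks, out[:3])  # 3 [1.0, 3.0, 5.0]
```

The choice of `block_dim` is where the hardware considerations above enter: on a real GPU it influences the occupancy of the streaming multiprocessors and how much shared memory each block can use.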

The objectives of our project are to:
1. redesign state-of-the-art EAs using CUDA, with thorough consideration of the hardware characteristics of GPUs;
2. develop a generic, hardware-self-configurable EA framework that automatically configures the available hardware computing resources to maximize the computational efficiency of the EA.

So far, we have developed a memory-efficient parallel differential evolution algorithm, which makes maximal use of the shared memory available on the GPU while minimizing the use of the GPU's global memory, whose access bandwidth is very limited compared with that of shared memory. Compared with two recent parallel differential evolution algorithms implemented with CUDA in 2010 and 2011, our algorithm runs significantly faster. We have also investigated the parallel implementation of test problems and provided guidelines on how to implement any user-defined test problem and combine it with an existing parallel EA framework. To the best of our knowledge, this is the first research work on this topic.
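The parallel structure exploited by a GPU differential evolution can be made explicit with a synchronous generation. In the sketch below, which is a CPU illustration and not the memory-efficient GPU algorithm itself, every trial vector is built only from the previous generation, so the n trial constructions, the n fitness evaluations and the n selections are independent and could each be mapped to one GPU thread. The DE/rand/1/bin variant, the parameters `scale = 0.5` and `cr = 0.9` and the sphere test problem are our assumptions; a single Python random generator stands in for the per-thread generators a CUDA version would need.

```python
import random


def sphere(x):
    """Test problem: sum of squares, minimum 0 at the origin."""
    return sum(v * v for v in x)


def make_trial(i, pop, rng, scale=0.5, cr=0.9):
    """DE/rand/1/bin trial vector for individual i, reading only the old population."""
    n, dim = len(pop), len(pop[i])
    r1, r2, r3 = rng.sample([k for k in range(n) if k != i], 3)
    j_rand = rng.randrange(dim)  # at least one component comes from the mutant
    return [pop[r1][j] + scale * (pop[r2][j] - pop[r3][j])
            if rng.random() < cr or j == j_rand else pop[i][j]
            for j in range(dim)]


def de_generation(f, pop, cost, rng):
    """One synchronous generation; each index i could be one GPU thread."""
    trials = [make_trial(i, pop, rng) for i in range(len(pop))]
    trial_cost = [f(t) for t in trials]  # batch evaluation of the test problem
    new_pop, new_cost = [], []
    for x, c, t, tc in zip(pop, cost, trials, trial_cost):
        if tc <= c:  # greedy one-to-one selection
            new_pop.append(t)
            new_cost.append(tc)
        else:
            new_pop.append(x)
            new_cost.append(c)
    return new_pop, new_cost


rng = random.Random(0)
pop = [[rng.uniform(-5.0, 5.0) for _ in range(5)] for _ in range(20)]
cost = [sphere(x) for x in pop]
start = min(cost)
for _ in range(300):
    pop, cost = de_generation(sphere, pop, cost, rng)
print(start, min(cost))
```

Writing the test problem as a function applied to every row of the population, as in the batch evaluation line, is one possible way to keep a user-defined problem decoupled from the EA; it is an assumption here, not the interface of the guidelines mentioned above.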

Modelling extremal events

Participants : Stéphane Girard, Laurent Gardes, Jonathan El-methni, El-Hadji Deme.

Joint work with: Guillou, A. (Univ. Strasbourg).

We introduced a new model of tail distributions depending on two parameters [16]. This model includes very different tail behaviors from the Fréchet and Gumbel maximum domains of attraction. In the particular cases of Pareto-type tails and Weibull tails, our estimators coincide with classical ones proposed in the literature, which allows us to retrieve their asymptotic normality in a unified way. The first year of the PhD work of Jonathan El-methni has been dedicated to the definition of an estimator of one of the two parameters of this model. This permits the construction of new estimators of extreme quantiles. The results have been submitted for publication [48]. Future work will consist of proposing a test procedure to discriminate between Pareto and Weibull tails.
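For reference, the best-known classical estimators in the Pareto-type case are the Hill estimator of the tail index and Weissman's extrapolation of extreme quantiles. The sketch below implements both on a simulated exact Pareto sample. It is a baseline for comparison, not the new two-parameter estimators of [16] or [48], and the sample size, the number k of upper order statistics and the seed are arbitrary choices.

```python
import math
import random


def hill(sample, k):
    """Hill estimator of the tail index from the k largest observations."""
    xs = sorted(sample)
    threshold = xs[-k - 1]  # order statistic X_{n-k,n}
    return sum(math.log(x / threshold) for x in xs[-k:]) / k


def weissman_quantile(sample, k, p):
    """Weissman extrapolation of the quantile of order 1 - p, with p < k / n."""
    n = len(sample)
    threshold = sorted(sample)[-k - 1]
    return threshold * (k / (n * p)) ** hill(sample, k)


rng = random.Random(42)
gamma = 0.5
# Exact Pareto sample: P(X > x) = x^(-1/gamma) for x >= 1.
sample = [(1.0 - rng.random()) ** (-gamma) for _ in range(10_000)]
# True values: tail index 0.5 and quantile (1e-4)^(-0.5) = 100.
print(hill(sample, 500), weissman_quantile(sample, 500, 1e-4))
```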

We are also working on the estimation of the second-order parameter (see paragraph 3.3.1). We proposed a new family of estimators encompassing the existing ones (see for instance [62], [61]). This work is in collaboration with El-Hadji Deme, a PhD student from the Université de Saint-Louis (Sénégal), who obtained a one-year mobility grant to work within the Mistis team on extreme-value statistics. The results have been submitted for publication [46].

Conditional extremal events

Participants : Stéphane Girard, Laurent Gardes, Gildas Mazo, Jonathan El-methni.

Joint work with: J. Carreau, A. Lekina, Amblard, C. (TimB in TIMC laboratory, Univ. Grenoble I) and Daouia, A. (Univ. Toulouse I)

The goal of the PhD thesis of Alexandre Lekina is to contribute to the development of theoretical and algorithmic models for conditional extreme value analysis, i.e. the situation where some covariate information is recorded simultaneously with a quantity of interest. In such a case, the tail heaviness of the quantity of interest depends on the covariate, and thus the tail index as well as the extreme quantiles are also functions of the covariate. We combine nonparametric smoothing techniques [58] with extreme-value methods in order to obtain efficient estimators of the conditional tail index and of conditional extreme quantiles. When the covariate is random (random design) and the tail of the distribution is heavy, we focus on kernel methods [14]. The extension to all kinds of tails is investigated in [45].
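A kernel-weighted Hill-type estimator gives the flavor of combining nonparametric smoothing with extreme-value methods in the random design, heavy-tailed case. In this sketch, which is one simple variant and not necessarily the estimator of [14], observations are weighted by an Epanechnikov kernel in the covariate, a local threshold is taken among the responses near x0, and the log-excesses above it are averaged with the kernel weights. The bandwidth `h`, the fraction `frac` of local exceedances and the simulated model, with tail index 0.2 + 0.3x, are hypothetical.

```python
import math
import random


def epanechnikov(t):
    return 0.75 * (1.0 - t * t) if abs(t) <= 1.0 else 0.0


def kernel_hill(xs, ys, x0, h, frac=0.1):
    """Kernel-weighted Hill-type estimate of the conditional tail index at x0."""
    local = [(epanechnikov((x - x0) / h), y) for x, y in zip(xs, ys)]
    local = sorted((p for p in local if p[0] > 0.0), key=lambda p: p[1])
    if len(local) < 2:
        raise ValueError("bandwidth too small: fewer than two local points")
    k = max(1, int(frac * len(local)))
    u = local[-k - 1][1]  # local threshold: (k+1)-th largest response near x0
    top = local[-k:]      # the k largest local responses
    num = sum(w * math.log(y / u) for w, y in top)
    return num / sum(w for w, _ in top)


rng = random.Random(7)
xs = [rng.random() for _ in range(20_000)]
# Conditional Pareto tails whose index depends on the covariate: 0.2 + 0.3 x.
ys = [(1.0 - rng.random()) ** (-(0.2 + 0.3 * x)) for x in xs]
print(kernel_hill(xs, ys, x0=0.5, h=0.1))  # true value at x0 = 0.5 is 0.35
```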

Conditional extremes are studied in climatology where one is interested in how climate change over years might affect extreme temperatures or rainfalls. In this case, the covariate is univariate (time). Bivariate examples include the study of extreme rainfalls as a function of the geographical location. The application part of the study is joint work with the LTHE (Laboratoire d'étude des Transferts en Hydrologie et Environnement) located in Grenoble.

Future work will include the study of multivariate and spatial extreme values. To this aim, research on some particular copulas [1] has been initiated with Cécile Amblard, since copulas are the key tool for building multivariate distributions [64]. The PhD theses of Jonathan El-methni and Gildas Mazo should also address this issue.
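Sklar's theorem is what makes copulas the building block of multivariate distributions: any copula combined with any continuous marginal distributions yields a joint distribution. The sketch below uses a Clayton copula, sampled by conditional inversion, purely as an illustration; it is not one of the particular copulas of [1]. The exponential and Pareto margins are arbitrary, and the check relies on the known value θ/(θ + 2) of Kendall's tau for the Clayton copula, which does not depend on the margins.

```python
import math
import random


def open_uniform(rng):
    """Uniform draw on the open interval (0, 1)."""
    while True:
        r = rng.random()
        if r > 0.0:
            return r


def clayton_pair(theta, rng):
    """Sample (U, V) from a Clayton copula (theta > 0) by conditional inversion."""
    u, w = open_uniform(rng), open_uniform(rng)
    v = (u ** -theta * (w ** (-theta / (1.0 + theta)) - 1.0) + 1.0) ** (-1.0 / theta)
    return u, v


def kendall_tau(pairs):
    """O(n^2) sample Kendall's tau, assuming no ties (continuous data)."""
    n, s = len(pairs), 0
    for i in range(n):
        for j in range(i + 1, n):
            a = (pairs[i][0] - pairs[j][0]) * (pairs[i][1] - pairs[j][1])
            s += (a > 0) - (a < 0)
    return 2.0 * s / (n * (n - 1))


rng = random.Random(3)
theta = 2.0
uv = [clayton_pair(theta, rng) for _ in range(1000)]
# Sklar: feed the copula sample into increasing inverse CDFs (the margins).
# Here X is unit exponential and Y is Pareto with P(Y > y) = y^(-2), y >= 1.
xy = [(-math.log(1.0 - u), (1.0 - v) ** -0.5) for u, v in uv]
print(kendall_tau(xy), theta / (theta + 2.0))  # sample vs theoretical tau
```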

Level sets estimation

Participants : Stéphane Girard, Laurent Gardes.

Joint work with: Guillou, A. (Univ. Strasbourg), Stupfler, G. (Univ. Strasbourg), P. Jacob (Univ. Montpellier II) and Daouia, A. (Univ. Toulouse I).

The boundary of the set of points is viewed as the largest level set of the distribution of the points. Its estimation is therefore an extreme quantile curve estimation problem. We proposed estimators based on projection as well as on kernel regression methods applied to the set of extreme values, for particular sets of points [10].

In collaboration with A. Daouia, we investigate the application of such methods in econometrics [41]: a new characterization of partial boundaries of a free disposal multivariate support is introduced by making use of large quantiles of a simple transformation of the underlying multivariate distribution. Pointwise empirical and smoothed estimators of the full and partial support curves are built as extreme sample and smoothed quantiles. Extreme-value theory then applies automatically to the empirical frontiers, and we show that some fundamental properties of extreme order statistics carry over to Nadaraya's estimates of upper quantile-based frontiers.
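In the single-input, single-output case, the usual empirical full frontier is the free disposal hull, φ(x) = max{Y_i : X_i ≤ x}, and a partial frontier of order α is the α-quantile of Y among the units with X_i ≤ x; letting α tend to 1 recovers the full frontier. The sketch below computes both pointwise, following these standard definitions from efficiency analysis. It omits the smoothed (Nadaraya-type) versions and the multivariate transformation of [41], and the simulated production data, with true frontier √x, are hypothetical.

```python
import math
import random


def fdh_frontier(points, x0):
    """Free disposal hull (full) frontier: largest output among units with X <= x0."""
    return max(y for x, y in points if x <= x0)


def order_alpha_frontier(points, x0, alpha):
    """Partial frontier: empirical alpha-quantile of Y among units with X <= x0."""
    ys = sorted(y for x, y in points if x <= x0)
    return ys[math.ceil(alpha * len(ys)) - 1]


rng = random.Random(5)
# Hypothetical production data below the monotone frontier g(x) = sqrt(x).
points = []
for _ in range(5000):
    x = rng.random()
    points.append((x, math.sqrt(x) * rng.random()))
# True frontier at x0 = 0.64 is 0.8.
print(fdh_frontier(points, 0.64), order_alpha_frontier(points, 0.64, 0.99))
```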

In the PhD thesis of Gilles Stupfler (co-supervised by Armelle Guillou and Stéphane Girard), new estimators of the boundary are introduced. The regression is performed on the whole set of points, the selection of the “highest” points being performed automatically through the use of high order moments. The results have been submitted for publication [51].
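The idea of letting high order moments select the highest points can be illustrated with a kernel estimate of E[Y^p | X = x0]. If, near x0, the points are uniformly distributed below the boundary g, then E[Y^p | X = x0] = g(x0)^p / (p + 1), so ((p + 1) E[Y^p | X = x0])^(1/p) recovers g(x0); for large p, Y^p is dominated by the points closest to the boundary. The sketch below is a simplified illustration under this uniformity assumption, not the estimators of [51], and the bandwidth `h`, the order `p` and the simulated data are arbitrary.

```python
import random


def epanechnikov(t):
    return 0.75 * (1.0 - t * t) if abs(t) <= 1.0 else 0.0


def moment_frontier(points, x0, h, p):
    """Boundary estimate at x0 from a kernel-weighted moment of order p."""
    weighted = [(epanechnikov((x - x0) / h), y) for x, y in points]
    num = sum(w * y ** p for w, y in weighted)
    den = sum(w for w, _ in weighted)
    # The factor (p + 1) corrects the moment when points are uniform below g.
    return ((p + 1) * num / den) ** (1.0 / p)


rng = random.Random(11)
points = []
for _ in range(5000):
    x = rng.random()
    points.append((x, (1.0 + x) * rng.random()))  # uniform below g(x) = 1 + x
print(moment_frontier(points, x0=0.5, h=0.1, p=20))  # true boundary: 1.5
```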

Quantifying uncertainties on extreme rainfall estimations

Participants : Laurent Gardes, Stéphane Girard.

Joint work with: Carreau, J. (Hydrosciences Montpellier) and Molinié, G. from Laboratoire d'Etude des Transferts en Hydrologie et Environnement (LTHE), France.

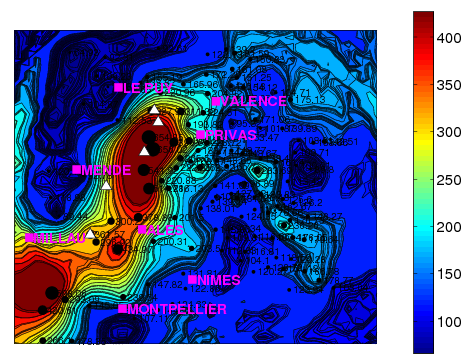

Extreme rainfalls are generally associated with two different precipitation regimes. Extreme rainfall cumulated over 24 hours results from stratiform clouds, for which the relief forcing is of primary importance. Extreme rainfall rates are defined as rainfall rates with a low probability of occurrence, typically with return levels higher than the maximum observed level. For example, Figure 2 presents the return levels for the Cévennes-Vivarais region obtained in [14]. It is therefore of primary importance to study the sensitivity of the extreme rainfall estimation to the estimation method considered.

The obtained results are published in [13].
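A simple way to see why the estimation method matters is the peaks-over-threshold return level. If excesses over a threshold u follow a generalized Pareto distribution with scale σ and shape ξ, and ζ is the probability that an observation exceeds u, the level exceeded on average once every m observations is u + (σ/ξ)((mζ)^ξ − 1), with limit u + σ log(mζ) as ξ tends to 0. The sketch evaluates this formula with hypothetical daily-rainfall parameters, not a fit to the Cévennes-Vivarais data, to show how strongly the extrapolated level depends on the shape parameter.

```python
import math


def gpd_return_level(u, sigma, xi, zeta, m):
    """Level exceeded on average once every m observations (peaks over threshold).

    u: threshold; sigma > 0, xi: GPD scale and shape of the excesses over u;
    zeta: probability that a single observation exceeds u.
    """
    if abs(xi) < 1e-12:  # limit of the general formula as xi -> 0
        return u + sigma * math.log(m * zeta)
    return u + sigma / xi * ((m * zeta) ** xi - 1.0)


# Hypothetical daily-rainfall fit (mm): same threshold and scale, three shapes;
# m = 36 500 daily observations corresponds to a 100-year return period.
for xi in (0.0, 0.1, 0.2):
    level = gpd_return_level(u=50.0, sigma=15.0, xi=xi, zeta=0.02, m=36_500)
    print(f"xi = {xi}: 100-year level = {level:.1f} mm")
```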

Retrieval of Mars surface physical properties from OMEGA hyperspectral images

Participant : Stéphane Girard.

Joint work with: Douté, S. from Laboratoire de Planétologie de Grenoble, France and Saracco, J. (Univ. Bordeaux).

Visible and near-infrared imaging spectroscopy is one of the key techniques to detect, map and characterize mineral and volatile species (e.g. water ice) existing at the surface of planets. Indeed, the chemical composition, granularity, texture, physical state, etc. of the materials determine the existence and morphology of the absorption bands. The resulting spectra therefore contain very useful information. Current imaging spectrometers provide data organized as three-dimensional hyperspectral images: two spatial dimensions and one spectral dimension. Our goal is to estimate the functional relationship between observed spectra and some physical parameters. To this end, a database of synthetic spectra is generated by a physical radiative transfer model and used to estimate this relationship. The high dimension of the spectra is reduced by Gaussian regularized sliced inverse regression (GRSIR) to overcome the curse of dimensionality and, consequently, the sensitivity of the inversion to noise (ill-conditioned problems). We have also defined an adaptive version of the method which is able to deal with block-wise evolving data streams [28].
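The dimension-reduction step can be sketched with plain sliced inverse regression (SIR): the responses are sorted into slices, the covariance Γ of the slice means of the centered predictors is computed, and the leading eigenvector of (Σ + τI)^(-1)Γ gives the reduced direction. The ridge term τI is our stand-in for the Gaussian prior used by GRSIR, which is more general. The four-dimensional synthetic data are hypothetical and replace the simulated spectra, which have many more bands.

```python
import random


def mat_inverse(a):
    """Gauss-Jordan inverse with partial pivoting (small dense matrices)."""
    n = len(a)
    m = [row[:] + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        d = m[col][col]
        m[col] = [v / d for v in m[col]]
        for r in range(n):
            if r != col:
                factor = m[r][col]
                m[r] = [vr - factor * vc for vr, vc in zip(m[r], m[col])]
    return [row[n:] for row in m]


def regularized_sir(X, y, n_slices=10, tau=1e-3, iters=200):
    """Leading SIR direction from (Sigma + tau I)^(-1) Gamma (ridge-regularized)."""
    n, p = len(X), len(X[0])
    mean = [sum(row[j] for row in X) / n for j in range(p)]
    Xc = [[row[j] - mean[j] for j in range(p)] for row in X]
    sigma = [[sum(r[i] * r[j] for r in Xc) / n + (tau if i == j else 0.0)
              for j in range(p)] for i in range(p)]
    order = sorted(range(n), key=lambda i: y[i])  # slices follow the sorted y
    gamma = [[0.0] * p for _ in range(p)]
    for s in range(n_slices):
        idx = order[s * n // n_slices:(s + 1) * n // n_slices]
        m = [sum(Xc[i][j] for i in idx) / len(idx) for j in range(p)]
        for i in range(p):
            for j in range(p):
                gamma[i][j] += len(idx) / n * m[i] * m[j]
    inv = mat_inverse(sigma)
    B = [[sum(inv[i][k] * gamma[k][j] for k in range(p)) for j in range(p)]
         for i in range(p)]
    b = [1.0] * p
    for _ in range(iters):  # power iteration for the leading eigenvector
        b = [sum(B[i][j] * b[j] for j in range(p)) for i in range(p)]
        norm = sum(v * v for v in b) ** 0.5
        b = [v / norm for v in b]
    return b


rng = random.Random(0)
n, p = 2000, 4
X = [[rng.gauss(0.0, 1.0) for _ in range(p)] for _ in range(n)]
# Hypothetical single-index model: y depends on X only through its first coordinate.
y = [row[0] ** 3 + 0.1 * rng.gauss(0.0, 1.0) for row in X]
b = regularized_sir(X, y)
print([round(v, 3) for v in b])
```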

Statistical modelling development for low power processor

Participant : Stéphane Girard.

Joint work with: A. Lombardot and S. Joshi (ST Crolles).

With technologies scaling down to the nanometer regime, static power dissipation in semiconductor devices is becoming increasingly important. Techniques to accurately estimate the static power dissipation of a System on Chip (SoC) are becoming essential. Traditionally, designers use a standard corner-based approach to optimize and check their devices. However, this approach can drastically underestimate or overestimate the impact of process variations and lead to significant errors.

The need for effective modeling of process variations in static power analysis has led to the introduction of statistical static power analysis. Some publications report that up to 50% of static power can be saved using a statistical approach. However, most statistical approaches are based on Monte Carlo analysis, which is not suited to large devices. It is thus necessary to develop solutions for large devices that can be integrated in an industrial design flow. Our objective is to model the total consumption of the circuit from the probability distribution of the consumption of each individual gate. Our preliminary results are published in [18].
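One classical analytical alternative to Monte Carlo for the total of many random contributions is the Fenton-Wilkinson approximation, which replaces a sum of independent lognormal variables by a single lognormal with the same mean and variance. The sketch below applies it to a hypothetical circuit in which the static power of each gate is lognormal and independent of the other gates. These are our assumptions (process variations are typically correlated across a die), and this is not the method of [18]. A Monte Carlo estimate of the 99th percentile is computed for comparison.

```python
import math
import random
from statistics import NormalDist, fmean


def fenton_wilkinson(mus, sigmas):
    """Lognormal (mu_s, sigma_s) matching the mean and variance of a sum of
    independent lognormals; also returns the exact mean of the sum."""
    mean = sum(math.exp(m + s * s / 2) for m, s in zip(mus, sigmas))
    var = sum((math.exp(s * s) - 1) * math.exp(2 * m + s * s)
              for m, s in zip(mus, sigmas))
    sigma2 = math.log(1.0 + var / mean ** 2)
    return math.log(mean) - sigma2 / 2, math.sqrt(sigma2), mean


def lognormal_quantile(mu, sigma, q):
    return math.exp(mu + sigma * NormalDist().inv_cdf(q))


# Hypothetical circuit: 200 gates with log-power parameters (mu, sigma).
mus = [0.0] * 200
sigmas = [0.3 + 0.1 * (i % 4) for i in range(200)]
mu_s, sigma_s, exact_mean = fenton_wilkinson(mus, sigmas)
fw_q99 = lognormal_quantile(mu_s, sigma_s, 0.99)

# Monte Carlo reference: the approach that becomes costly for large designs.
rng = random.Random(0)
sums = sorted(sum(rng.lognormvariate(m, s) for m, s in zip(mus, sigmas))
              for _ in range(2000))
mc_q99 = sums[math.ceil(0.99 * len(sums)) - 1]
print(exact_mean, fmean(sums), fw_q99, mc_q99)
```

The analytical route costs one pass over the gates, whereas the Monte Carlo estimate costs one pass per simulated chip, which is the scaling issue raised above.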